Start the master from your Spark folder with sbin/start-master.sh, then check that there were no errors by opening the master's web UI in your browser. Next, start the slave from your Spark folder.

The configuration file's own comments describe its purpose:

    # Default system properties included when running spark-submit.
    # This is useful for setting default environmental settings.
    # -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"
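The "start the master, then check the web UI" step can be sketched as a small helper. This is only a sketch under assumptions: `wait_for_ui` is a hypothetical helper name, `MASTER_UI_URL` is a hypothetical variable, and the `http://localhost:8080` fallback assumes Spark's default master web UI port with no other service bound to it. It also assumes `curl` is installed.

```shell
# Poll a URL until it answers or the attempts run out.
# Returns 0 when the page responds, 1 otherwise.
wait_for_ui() {
    local url="$1" tries="${2:-10}" i=0
    while [ "$i" -lt "$tries" ]; do
        # -f: treat HTTP errors as failure; -s: no progress output.
        if curl -fs -o /dev/null --max-time 2 "$url"; then
            return 0
        fi
        i=$((i + 1))
        # Pause between attempts, but not after the last one.
        [ "$i" -lt "$tries" ] && sleep 1
    done
    return 1
}

# Usage, run from the Spark folder (URL is an assumption, see above):
#   sbin/start-master.sh
#   wait_for_ui "${MASTER_UI_URL:-http://localhost:8080}" && echo "master UI is up"
```

Polling a few times rather than checking once gives the master a moment to bind its port after the start script returns.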

#INSTALL APACHE SPARK ON VMWARE LICENSE#

    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and

#INSTALL APACHE SPARK ON VMWARE SOFTWARE#

    # (the "License"); you may not use this file except in compliance with
    # Unless required by applicable law or agreed to in writing, software

- Use Velero to back up the PersistentVolumes (PVs) used by the deployment on the source cluster.
- Use Velero to restore the backed-up PVs on the destination cluster.

#INSTALL APACHE SPARK ON VMWARE INSTALL#

Open WSL either by Start > wsl.exe or using your preferred terminal.

Navigate to Apache Spark's folder with cd /path/to/spark/folder.

Edit the conf/nf file with the following configuration:

    # Licensed to the Apache Software Foundation (ASF) under one or more
    # this work for additional information regarding copyright ownership.
    # The ASF licenses this file to You under the Apache License, Version 2.0

These commands are valid only for the current session: as soon as you close the terminal, they are discarded. To keep them for every WSL session, append them to ~/.bashrc.

Create a tmp log folder for Spark with mkdir -p /tmp/spark-events.

If you download the source code from the Apache Spark site and build it with the command build/mvn -Pyarn -Phadoop-2.7 -Dhadoop.version=2.7.0 -DskipTests clean package, the build runs into many build-tool dependency failures.

These are the steps you will usually follow to back up and restore your cluster data:

- Install Velero on the source and destination clusters.
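The note about appending commands to ~/.bashrc, together with the log-folder step, might look like the sketch below. `persist_line` is a hypothetical helper, and the `SPARK_HOME` line in the usage comment is only an illustrative assumption, since the guide's actual export commands are not shown here.

```shell
# Append a line to a file only if it is not already present,
# so running this more than once does not create duplicates.
persist_line() {
    local line="$1" file="$2"
    touch "$file"
    # -x: match whole lines, -F: fixed string, -q: quiet.
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Create the temporary event-log folder from the guide.
mkdir -p /tmp/spark-events

# Usage (the variable name and value are assumptions):
#   persist_line 'export SPARK_HOME=/path/to/spark/folder' ~/.bashrc
```

Checking for the line before appending keeps ~/.bashrc clean when the setup is repeated.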

#INSTALL APACHE SPARK ON VMWARE WINDOWS#

Latest Java Development Kit (JDK) version. Install the JDK following my other guide's section under Linux, here.

Install Maven with your OS's package manager; I have Ubuntu, so I use sudo apt install maven.

Apache Spark

Download Apache Spark here and choose your desired version.

Extract the folder wherever you want. I suggest placing it in a WSL folder or a Windows folder CONTAINING NO SPACES IN THE PATH (see the image below).
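A quick sanity check of these prerequisites could look like the following sketch. `SPARK_DIR`, `path_has_no_spaces`, and `report_tool` are hypothetical names introduced for illustration; the script only reports whether `java` and `mvn` are on the PATH and whether the chosen folder path contains spaces.

```shell
# Return success (0) when the given path contains no space characters.
path_has_no_spaces() {
    case "$1" in
        *" "*) return 1 ;;
        *)     return 0 ;;
    esac
}

# Report whether a command is available on PATH.
report_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "$1: found"
    else
        echo "$1: missing"
    fi
}

# SPARK_DIR is a hypothetical variable; point it at the extracted folder.
SPARK_DIR="${SPARK_DIR:-/path/to/spark/folder}"
if path_has_no_spaces "$SPARK_DIR"; then
    echo "path ok: $SPARK_DIR"
else
    echo "path contains spaces: $SPARK_DIR"
fi

report_tool java
report_tool mvn
```

Run it with, for example, `SPARK_DIR=/mnt/c/spark sh check.sh`, where `check.sh` is whatever file name you saved the sketch under.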

All code and examples from this blog post are available on GitHub. Analytics have become a major tool in the sports world.

- Create a Dataproc cluster, installing Apache HBase and Apache ZooKeeper on the cluster.
- Create an HBase table using the HBase shell running on the master node of the Dataproc cluster.
- Use Cloud Shell to submit a Java or PySpark Spark job to the Dataproc service that writes data to, then reads data from, the HBase table.

We are not actively using Spark AI libraries at this time, but we are going to use them. Spark gathers data from Couchbase and does the calculations.

#INSTALL APACHE SPARK ON VMWARE HOW TO#

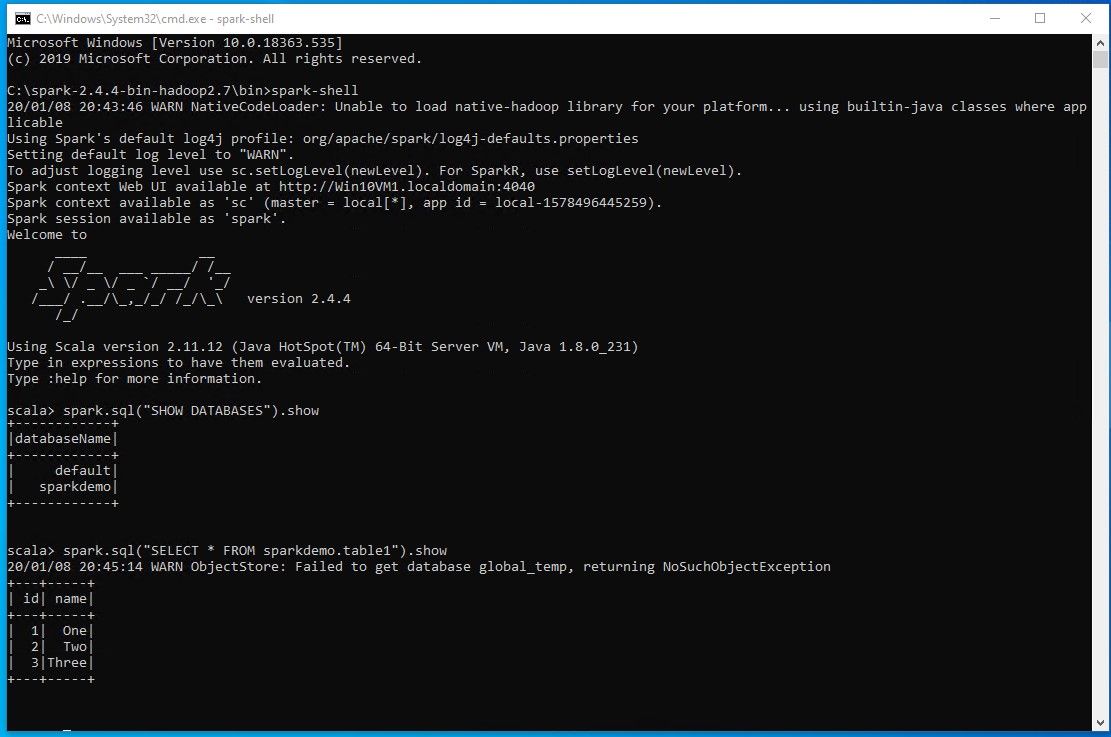

We just finished a central front project called MFY for our in-house fraud team. In front of Spark, we are using Couchbase. In this project, we are using Spark along with Cloudera. Spark is mainly used for aggregations and AI (for future usage).

Apache Spark has become a common tool in the data scientist's toolbox, and in this post we show how to use the recently released Spark 2.1 for data analysis using data from the National Basketball Association (NBA). We can also explore how to run Spark jobs from the command line and Spark shell.

Test the Spark installation by running the following compute.
